Technical Overview

Schematic Overview

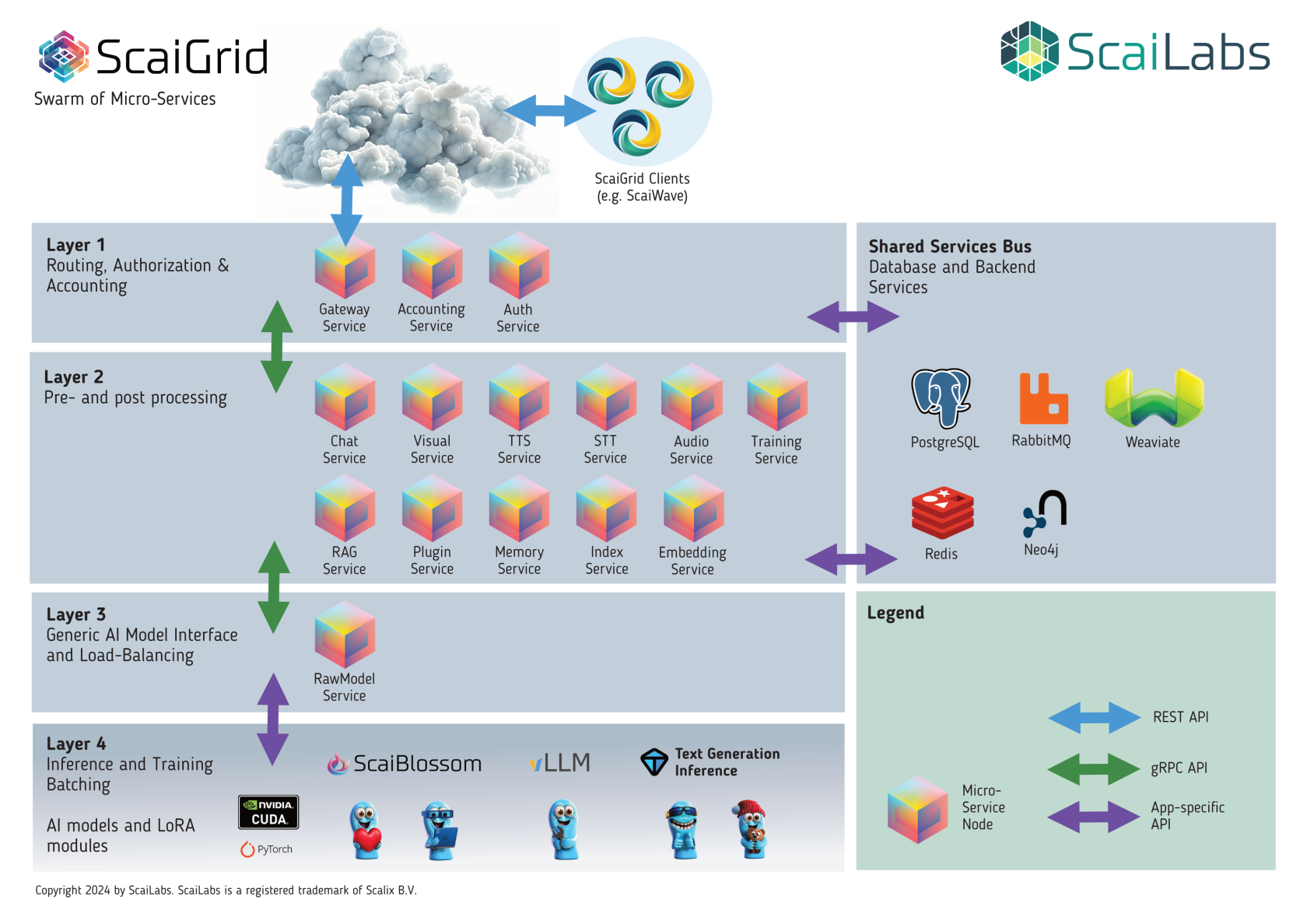

ScaiGrid runs on a modern, containerized infrastructure built on a scalable micro-services architecture. Individual micro-services can be scaled out horizontally when demand increases and scaled back in when load is low.

The picture above is a schematic representation of the ScaiGrid infrastructure. In a minimum setup, there is a single instance of each micro-service shown above.
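The scale-out and scale-in behaviour described above can be illustrated with a proportional scaling rule. This is only a sketch: the document does not state which scaling policy ScaiGrid uses, so the rule below (the same shape as the one used by the Kubernetes Horizontal Pod Autoscaler), the function name `desired_replicas`, and the `max_replicas` default are assumptions. The lower bound of one replica mirrors the minimum setup of one instance per service.

```python
import math


def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Return the replica count needed to bring per-replica load back to the target.

    Assumption: load is any per-replica metric (for example CPU percent or
    requests per second) and target_load is the desired value of that metric.
    """
    if current_replicas < 1:
        raise ValueError("current_replicas must be at least 1")
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Proportional rule: replicas grow or shrink with the ratio of observed to target load.
    raw = math.ceil(current_replicas * current_load / target_load)
    # Clamp to the allowed range; min_replicas=1 corresponds to the minimum setup.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(2, 90.0, 60.0))  # load above target: scale out
print(desired_replicas(4, 10.0, 60.0))  # load far below target: scale back in
```

Clamping to a minimum of one replica keeps every service available even when the load drops to zero.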

- Layer 1: All incoming requests are processed via the Gateway service. The Gateway service supports two APIs:

  - ScaiGrid's fully featured, OpenAPI-based API.

  - An OpenAI-compatible REST API.

  The Gateway service authenticates all requests via the Auth service. System usage is tracked by the Accounting service.

- Layer 2: This layer pre-processes and processes all requests. Pre-processing can include, for example, interfacing with external plugins through the Plugin Service, or querying databases for additional relevant information through the RAG Service. The micro-services listed here are a non-exhaustive selection of those on this layer.

- Layer 3: This layer is ScaiGrid's universal interface to all AI models hosted within ScaiGrid. The RawModelService interfaces with our AI models using one of three technologies in Layer 4.

- Layer 4:
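To make the OpenAI-compatible REST API offered by the Gateway service in Layer 1 more concrete, the sketch below builds a chat completion request using only the Python standard library. Everything deployment-specific here is an assumption: the host name `scaigrid.example.com`, the `/v1/chat/completions` path (taken from the OpenAI convention, not confirmed for ScaiGrid), the bearer-token header, and the model name `example-model` are placeholders. The request is built but not sent.

```python
import json
import urllib.request

# Hypothetical values: replace with the endpoint and API key of your deployment.
BASE_URL = "https://scaigrid.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_chat_request(prompt: str, model: str = "example-model") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumed authentication scheme: bearer token, as in the OpenAI API.
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("Hello")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` and decoding the response body with `json.load` would send it and read the reply.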

Types of deployment

ScaiGrid supports the following types of deployment:

Platform technical requirements

Containerized Deployment Supported Platforms

- Kubernetes 1.24 or newer

- Docker Engine 23.0 or newer
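The minimum versions above can be checked programmatically before installation. The following is a minimal sketch, assuming the version is available as a string such as the one reported by `kubectl version` or `docker version`; the names `MINIMUM_VERSIONS`, `parse_version` and `is_supported` are illustrative and not part of ScaiGrid.

```python
import re

# Minimum (major, minor) versions taken from the list above.
MINIMUM_VERSIONS = {
    "kubernetes": (1, 24),
    "docker": (23, 0),
}


def parse_version(text: str) -> tuple[int, int]:
    """Extract the first major.minor pair from a version string such as 'v1.28.3'."""
    match = re.search(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no major.minor version found in {text!r}")
    return int(match.group(1)), int(match.group(2))


def is_supported(platform: str, version_text: str) -> bool:
    """Return True if the given version meets the minimum for the platform."""
    # Tuples compare element by element, so (1, 28) >= (1, 24) is True.
    return parse_version(version_text) >= MINIMUM_VERSIONS[platform]


print(is_supported("kubernetes", "v1.28.3"))
print(is_supported("docker", "20.10.24"))
```

Comparing integer tuples rather than strings avoids the classic mistake of treating "1.9" as newer than "1.24".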

Virtualized Deployment Supported Hypervisors

- VMware ESXi 7.0

- VMware ESXi 8.0

- Proxmox VE 7.4

- Proxmox VE 8.1

Please note that nodes that run inference or training workloads require an NVIDIA GPU.

Cloud Compatibility

- Azure Kubernetes Service (AKS)

  - Deployment region must support NVIDIA GPU-enabled instances

- Amazon EKS

  - Deployment region must support NVIDIA GPU-enabled instances

- Akamai Managed Kubernetes

  - Deployment region must support NVIDIA GPU-enabled instances

- OVHcloud Managed Kubernetes

  - Deployment region must support NVIDIA GPU-enabled instances

Supported GPUs

- NVIDIA GeForce RTX 4090

- NVIDIA A30

- NVIDIA A6000

- NVIDIA A100

- NVIDIA P100
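A node can be checked against this list by comparing the GPU names reported by the driver with the supported models. The sketch below is an assumption-laden illustration, not an official ScaiGrid tool: it relies on `nvidia-smi --query-gpu=name --format=csv,noheader` being available on the node, and it matches model tokens heuristically because reported names usually carry suffixes (for example memory size or form factor).

```python
import re
import subprocess

# Model tokens derived from the supported GPU list above.
SUPPORTED_MODELS = ["RTX 4090", "A30", "A6000", "A100", "P100"]


def is_supported_gpu(name: str) -> bool:
    """Heuristically match a reported GPU name against the supported models."""
    for model in SUPPORTED_MODELS:
        # The token must not be glued to a preceding letter or digit, and must not
        # be followed by another digit (so 'A30' does not match 'A3000').
        pattern = r"(?<![A-Za-z0-9])" + re.escape(model) + r"(?![0-9])"
        if re.search(pattern, name):
            return True
    return False


def installed_gpus() -> list[str]:
    """Return the GPU names reported by nvidia-smi (requires the NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


# Example names, used here instead of calling nvidia-smi.
for sample in ["NVIDIA A100-SXM4-40GB", "NVIDIA GeForce RTX 3090"]:
    print(sample, is_supported_gpu(sample))
```

On a GPU node, `[name for name in installed_gpus() if is_supported_gpu(name)]` would list the installed GPUs that appear on the supported list.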

Customer Virtual Appliance Requirements

- VMware ESXi 7.0

- VMware ESXi 8.0

- Proxmox VE 7.4

- Proxmox VE 8.1

For local embedding support, one or more supported GPUs are required:

- NVIDIA GeForce RTX 4090

- NVIDIA A30

- NVIDIA A6000

- NVIDIA A100

- NVIDIA P100

GPU support on VMware may require additional licensing from both VMware and NVIDIA. Please check this with your current integrator.

For Proxmox, only direct GPU passthrough is currently supported.

Platform Components

The list below is a non-exhaustive overview of the components used by ScaiGrid. ScaiLabs' ScaiGrid environment is under continuous maintenance; as such, the versions listed here may be slightly out of date.

- Operating Systems:

  - Debian GNU/Linux 12

- Containerization:

  - Docker Engine runc 25

- Web services:

  - nginx 1.25

  - Gunicorn 21.2

- Programming and Runtime Environments:

  - Microsoft .NET 8.0

  - Python 3.11

  - Java SE 17

- Software Libraries:

  - PyTorch 2.0

  - NVIDIA CUDA 12.1

  - Microsoft Blazor

- Databases:

  - PostgreSQL 16

  - Redis 7.0

  - RabbitMQ 3.13

  - Weaviate 1.24

  - Neo4j 5.19